All users can now access multimodal AI.

The Ray-Ban Meta smart glasses have been a pleasant surprise for many buyers. They look like an ordinary pair of sunglasses, but they can record video, take photos, livestream, and stand in for a pair of headphones. Ever since early access testing started in January, though, everyone has been waiting for the integration of multimodal AI. It is now available.

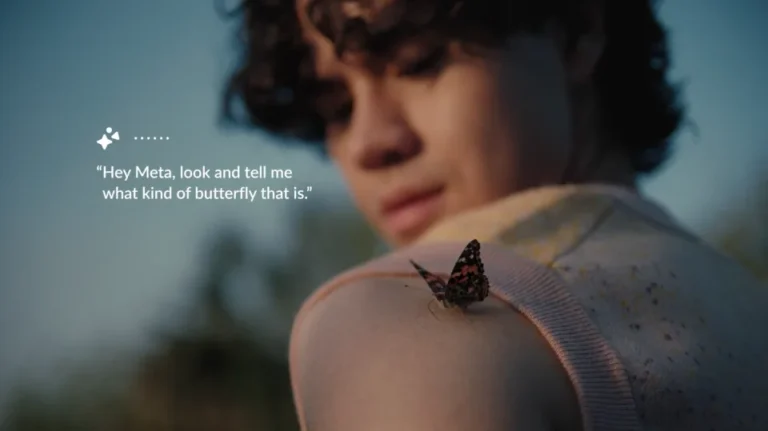

What is multimodal AI? Put simply, it is a set of technologies that lets an AI assistant process several kinds of information, including photos, videos, text, and audio. In practice, that means an AI that can observe and interpret the world around you in real time. This is the fundamental idea behind Humane’s AI Pin, which has drawn criticism. Meta’s version is more conservative in its promises and, frankly, we were very impressed with it during our first hands-on.

Multimodal Meta AI is rolling out widely on Ray-Ban Meta starting today! It's a huge advancement for wearables & makes using AI more interactive & intuitive.

Excited to share more on our multimodal work w/ Meta AI (& Llama 3), stay tuned for more updates coming soon. pic.twitter.com/DLiCVriMfk

Ahmad Al-Dahle (@Ahmad_Al_Dahle), April 23, 2024

How does it work? The glasses have a built-in camera and five microphones, which serve as the AI’s eyes and ears. With that in mind, you can ask the glasses to describe anything you are looking at. Want to know a dog’s breed before you adopt it? Just ask the glasses. According to Meta, the AI can also read signs in a variety of languages, which is ideal for travelling. We had a lot of fun saying, “Hey, Meta, look at this and tell me what it says” and then hearing it do just that. There is also a feature that identifies places of interest, although it was not available for testing.

There are a few other potential use cases, such as looking at loose items on a kitchen counter and asking the AI to come up with a suitable recipe. Still, we need a few weeks of real people putting the technology through its paces before we can judge what it is genuinely capable of. Real-time translation could well be the killer app, especially for travellers, though we can only hope the AI keeps its hallucinations to a minimum. Mark Zuckerberg has shown the AI picking out clothes for him to wear but, come on, that is about as far from everyday reality as it gets.

Multimodal AI was not the only upgrade announced for the smart glasses today. Meta also announced hands-free video calling through WhatsApp and Messenger. There are new frame designs for the fashion-conscious, too. These new models are available for preorder now and can be fitted with prescription lenses. The Ray-Ban Meta smart glasses start at $300, which is not a small amount of money but compares very favourably with $700 for a cumbersome pin.