Google is also bringing wheelchair accessibility information to Maps on desktop.

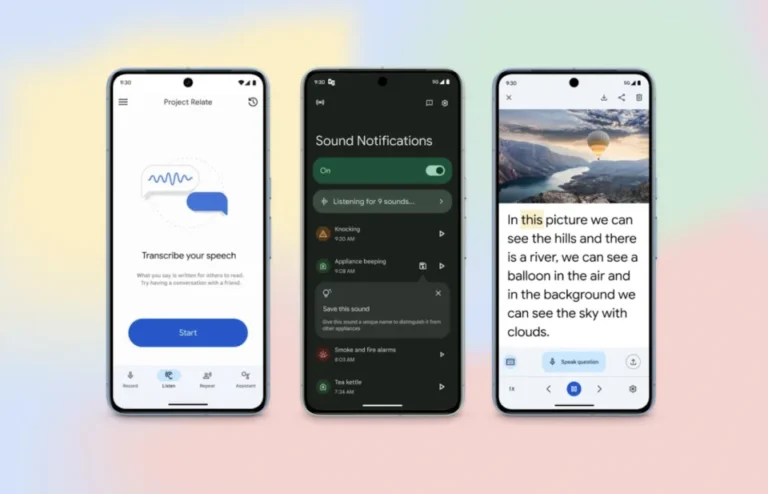

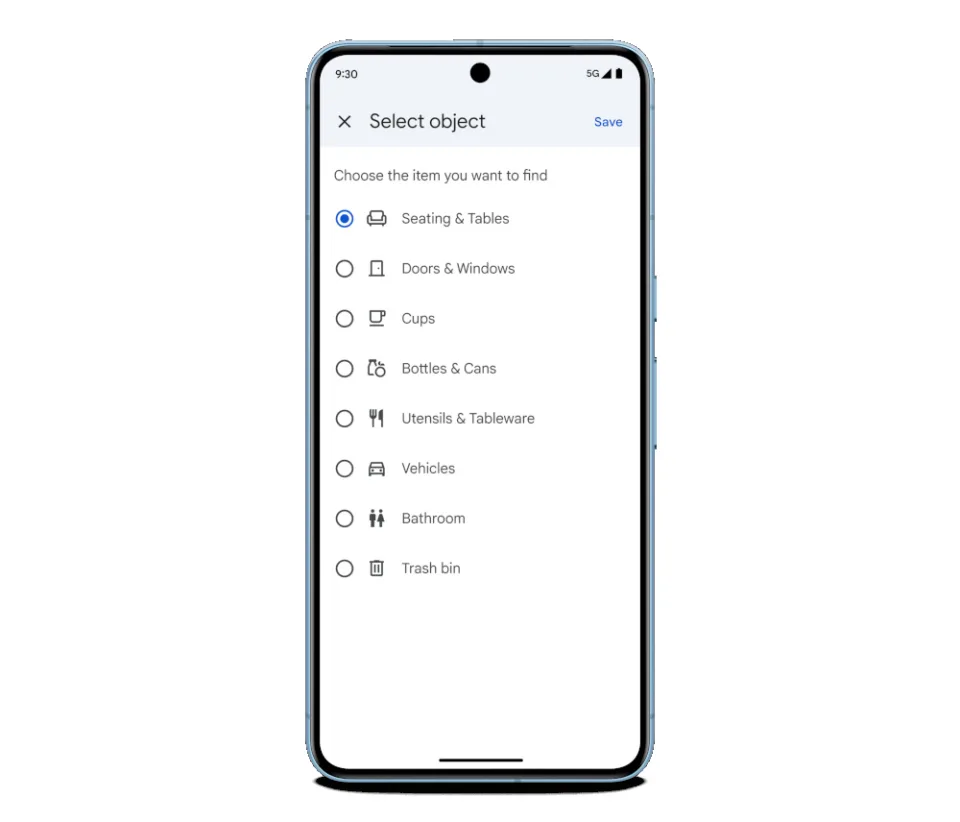

Google has updated some of its accessibility apps with new features that make them easier to use for the people who rely on them. It has released a new version of Lookout, an app that reads text, including lengthy documents, aloud for people who are blind or have low vision. The app can also read food labels, recognize currency, and describe what it sees through the camera and in images. The latest version adds a “Find” mode that lets users choose from seven item categories, including seating, tables, vehicles, cutlery, and toilets.

When users select a category, the app recognizes objects associated with it as they move their camera around a room. It then tells them the direction or distance to the object, making it easier to interact with their surroundings. Google has also added an in-app capture button, so users can take photos and quickly get AI-generated descriptions.

The company has also updated its Look to Speak app, which lets users communicate by using eye gestures to select phrases from a list for the app to speak aloud. Google has now added a text-free mode that lets users trigger speech by choosing from a photo book of emojis, symbols, and photos. Users can also personalize what each symbol or image means to them.

Google has also expanded its screen reader capabilities for Lens in Maps, so the app can tell users the names and categories of the places it sees, such as restaurants and ATMs. It can also tell them how far away a particular location is. In addition, the company is rolling out improvements to detailed voice guidance, a feature that provides audio prompts telling users which direction to go.

Finally, Google has made Maps' wheelchair information available on desktop, four years after it launched on Android and iOS. The Accessible Places feature lets users check whether the place they plan to visit can accommodate their needs. Businesses and public venues with an accessible entrance, for instance, display a wheelchair icon. Users can also use the feature to check whether a location has accessible restrooms, seating, and parking. Google says Maps currently has accessibility information for more than fifty million places. Those who prefer to look up wheelchair information on Android and iOS will now also be able to easily filter reviews that focus on wheelchair access.

Google made all of these announcements at this year's I/O developer conference. It also said that it had open-sourced more code for the Project Gameface hands-free “mouse,” allowing Android developers to use it in their apps. The tool lets users control the cursor with head movements and facial gestures, making it easier for them to use their computers and phones.