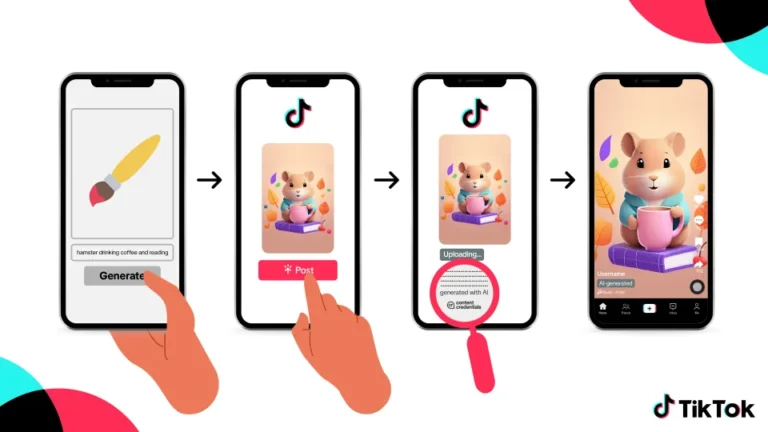

The app will get support for content credentials, which let you find out where a picture came from.

TikTok is stepping up its efforts to automatically label AI-generated content on its platform, even when that content was made with third-party tools. The company says it will begin supporting content credentials, a type of digital watermark that signals when generative AI was used.

TikTok's rules already require creators to disclose "realistic" AI-generated content. But the company may struggle to enforce that rule, especially when creators use AI tools from other companies. TikTok's new automatic labels should close some of those gaps, because content credentials are becoming widely adopted across the tech industry.

Content credentials are often described as a "nutrition label" for digital content. They attach "tamper-evident metadata" that can show where an image came from and which AI tools were used to alter it along the way. When people encounter AI-generated content on a platform that supports the technology, they will be able to view that history.

TikTok says it will be the first video platform to support content credentials, but it may be some time before the labels become common, since many companies are only beginning to adopt the technology. Google, Microsoft, OpenAI, and Adobe have all committed to supporting content credentials, and Meta has said it is using the standard to power labels on its platform.

Content credentials and other metadata-based systems are not foolproof, however. On a help page, OpenAI says the technology "is not a silver bullet" and that metadata "can easily be removed either accidentally or intentionally." Labels are also less effective when users don't bother to read them.

TikTok says it has a plan for that, too. The company has launched several media literacy campaigns with the fact-checking organization MediaWise and the human rights group Witness, aimed at teaching TikTok users about the labels and about "potentially misleading" AI-generated content.