The former co-lead of OpenAI's Superalignment team resigned, saying that showy products had taken precedence over safety.

In the summer of 2023, OpenAI established a team called "Superalignment" to steer and control future AI systems that could be powerful enough to cause human extinction. Less than a year later, that team has been disbanded.

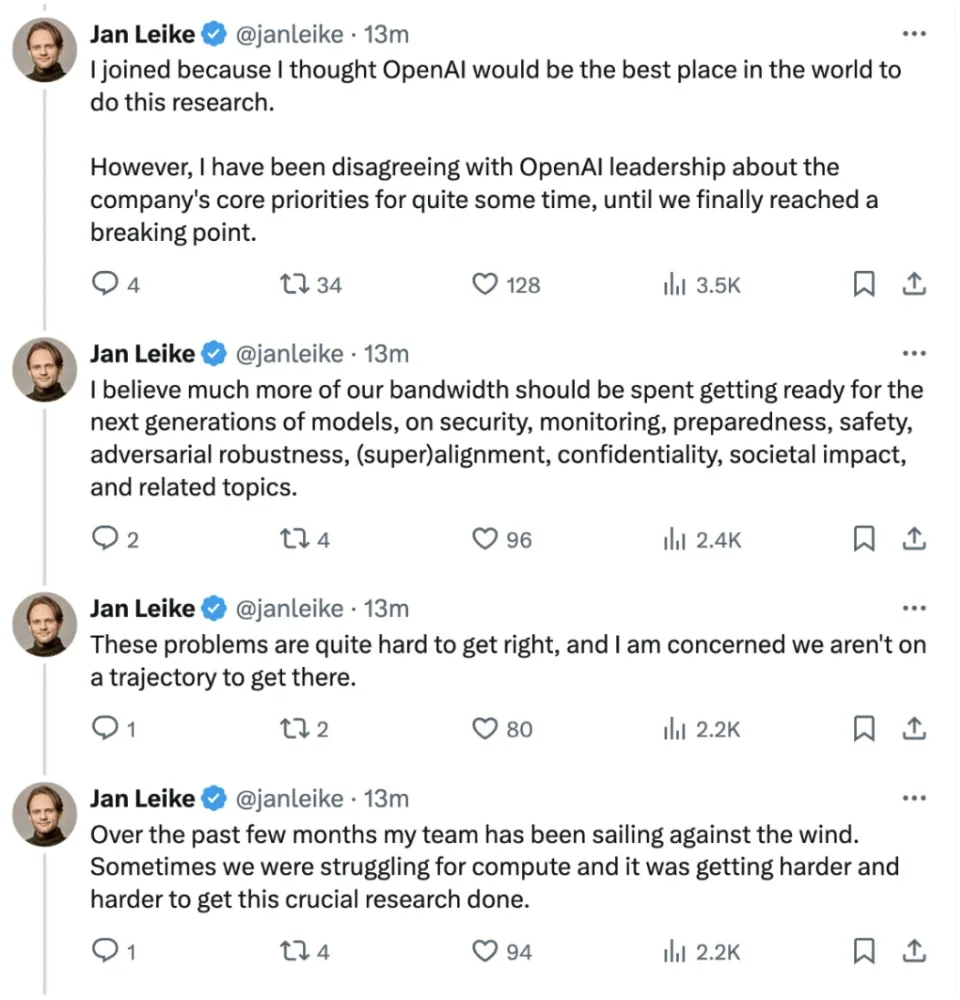

In a statement to Bloomberg, OpenAI said the company was "integrating the group more deeply across its research efforts in order to assist the company in achieving its safety goals." However, a series of posts by Jan Leike, one of the team's leaders, who recently resigned, revealed internal tensions between the safety team and the wider company.

In a statement posted on X on Friday, Leike said that the Superalignment team had been struggling to get the resources it needed to carry out its research. "Building smarter-than-human machines is an inherently dangerous endeavor," Leike wrote. "OpenAI is shouldering an enormous responsibility on behalf of all of humanity. But over the past years, safety culture and processes have taken a backseat to shiny products." OpenAI did not immediately respond to a request for comment from Newtechmania.

Leike left the company earlier this week, just hours after OpenAI chief scientist Ilya Sutskever announced that he was leaving. Sutskever was not only one of the leads of the Superalignment team but also a co-founder of the company. His departure came six months after he was involved in the decision to fire CEO Sam Altman over concerns that Altman had not been "consistently candid" with the board of directors. Altman's all-too-brief ouster set off an internal revolt at the company, with nearly 800 employees signing a letter in which they threatened to quit if Altman was not reinstated. Five days later, Altman was back as OpenAI's CEO, after Sutskever had signed a letter saying that he regretted his actions.

When it announced the Superalignment team, OpenAI said it would dedicate 20 percent of its computing capacity over the next four years to solving the problem of controlling the powerful AI systems of the future. At the time, the company wrote that "[getting] this right is critical to achieve our mission." In a post on X, Leike wrote that the Superalignment team had been "struggling for compute and it was getting harder and harder" to carry out crucial AI safety research. "Over the past few months, my team has been sailing against the wind," he wrote. He added that he had reached "a breaking point" with OpenAI's leadership after disagreeing with them about the company's core priorities.

The Superalignment team has seen a growing number of departures over the past few months. In April, OpenAI reportedly fired two researchers, Leopold Aschenbrenner and Pavel Izmailov, for allegedly leaking information.

OpenAI told Bloomberg that its future safety efforts will be led by John Schulman, another co-founder, whose research focuses on large language models. Jakub Pachocki, a director who led the development of GPT-4, one of OpenAI's flagship large language models, will replace Sutskever as chief scientist.

Superalignment was not the only OpenAI team focused on AI safety. In October, the company set up a new "preparedness" team to mitigate potential "catastrophic risks" from AI systems, including cybersecurity issues and chemical, radiological, and biological threats.

Update, May 17, 2024, 3:28 PM ET: In response to a request for comment on Leike's claims, an OpenAI public relations representative pointed Engadget to a post from Sam Altman, in which Altman said he would share a statement in the next few days.