It is said to be able to provide answers comparable to models ten times larger.

According to a newly published research paper, Microsoft has introduced its latest lightweight artificial intelligence model, Phi-3 Mini, which is designed to run on smartphones and other local devices. It is the first of three small Phi-3 language models the company plans to release in the near future, and it has 3.8 billion parameters. The aim is to offer a more affordable alternative to cloud-based LLMs, so that smaller organizations can adopt artificial intelligence.

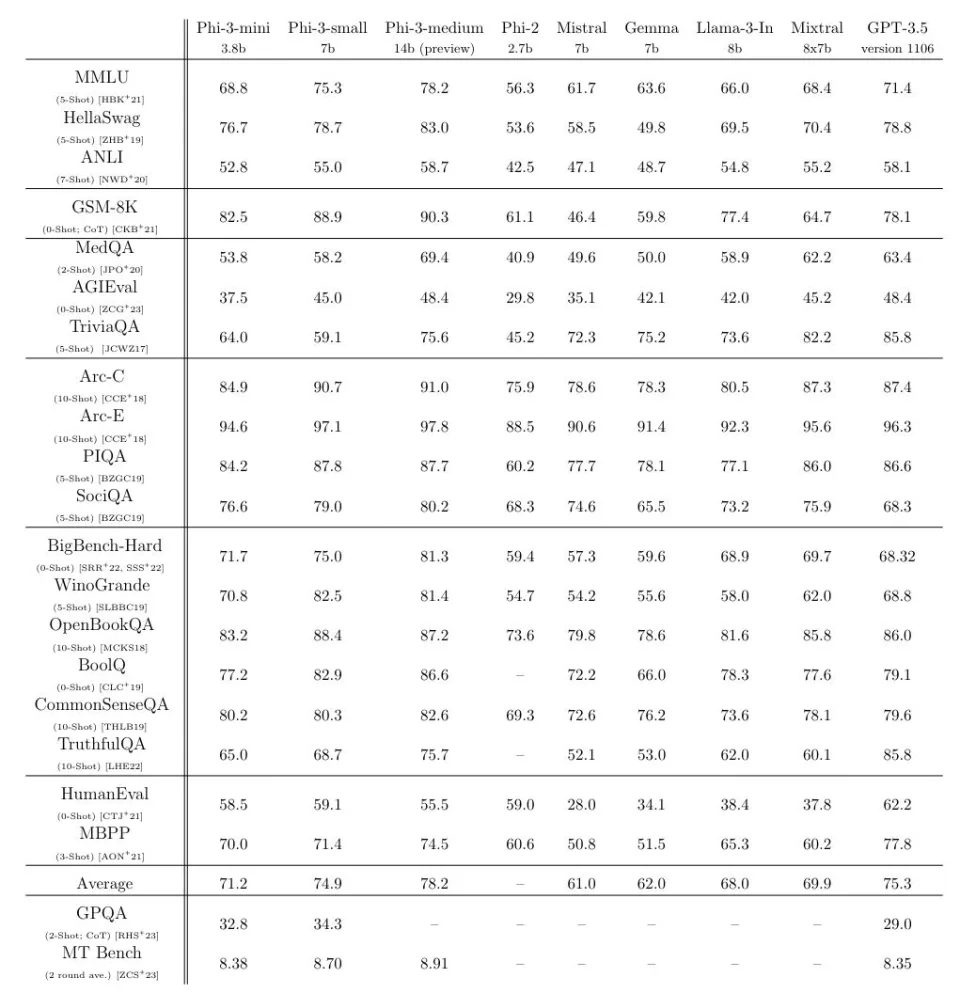

Microsoft claims that the new model significantly outperforms its earlier small model, Phi-2, and is on par with larger models such as Llama 2. The company says Phi-3 Mini can provide responses comparable to those of a model ten times its size.

“The innovation lies entirely in our dataset for training,” the research paper states. The dataset builds on the one used for Phi-2 and additionally makes use of “heavily filtered web data and synthetic data,” according to the team. A separate LLM was used for both of those tasks, in effect generating new data that lets the smaller language model perform better. According to The Verge, the team was reportedly inspired by children’s books, which use simpler language to convey complex ideas.

Phi-3 Mini outperforms Phi-2 and other small language models (Mistral, Gemma, and Llama-3-In) on a variety of tasks, including math, programming, and academic tests. However, it still cannot match the results of cloud-powered LLMs. It also runs on devices as simple as smartphones and does not require an internet connection.

Because of the limited size of the dataset, its primary limitation is the breadth of “factual knowledge” it holds, which is why it performs poorly on the “TriviaQA” test. Even so, it should be useful for applications that only rely on relatively small internal data sets. Microsoft hopes this will allow businesses that cannot afford cloud-connected LLMs to start using AI.

Phi-3 Mini is now available on Azure, Hugging Face, and Ollama. In the near future, Microsoft plans to launch Phi-3 Small and Phi-3 Medium, which will be considerably more capable, with 7 billion and 14 billion parameters, respectively.
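
To give a rough sense of why parameter count matters for on-device use, the sketch below estimates how much storage the weights alone would need for the three model sizes mentioned here. The bit widths (16, 8, and 4 bits per parameter) are illustrative assumptions rather than figures from this article, and the estimate ignores activations, caches, and runtime overhead.

```python
# Back-of-the-envelope weight size: parameters x bits per parameter.
# Parameter counts come from the article; the bit widths are assumed
# for illustration and are not claims about how Microsoft ships the models.

PARAMS = {
    "Phi-3 Mini": 3.8e9,
    "Phi-3 Small": 7e9,
    "Phi-3 Medium": 14e9,
}


def weight_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def footprint_table(bit_widths=(16, 8, 4)) -> dict:
    """Map each model name to {bit width: approximate weight size in GB}."""
    return {
        name: {bits: round(weight_gb(n, bits), 2) for bits in bit_widths}
        for name, n in PARAMS.items()
    }


if __name__ == "__main__":
    for name, row in footprint_table().items():
        sizes = ", ".join(f"{bits}-bit: {gb} GB" for bits, gb in row.items())
        print(f"{name}: {sizes}")
```

Under these assumptions, only the smallest model at a low bit width lands in a range that a typical phone's memory could plausibly hold, which is consistent with Phi-3 Mini being the variant aimed at smartphones.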