OpenAI is trying out a new way to tell if a picture was made by AI.

We all think we're pretty good at spotting images made by AI. It's the weird alien text in the background. It's the bizarre inaccuracies that seem to break the laws of physics. Most of all, it's those gruesome hands and fingers. The technology keeps improving, though, and it won't be long before we can't tell what's real from what isn't. Industry leader OpenAI is trying to get ahead of the problem by developing a toolkit that detects images created by its own DALL-E 3 generator. The results are a mixed bag.

The company claims it can accurately detect images generated by DALL-E 3 around 98 percent of the time, which is impressive. However, there are some significant caveats. First, the image must have been created by DALL-E, which is far from the only image generator on the market. The internet is full of them. According to data provided by OpenAI, the system correctly classified only five to ten percent of images made by other AI models.

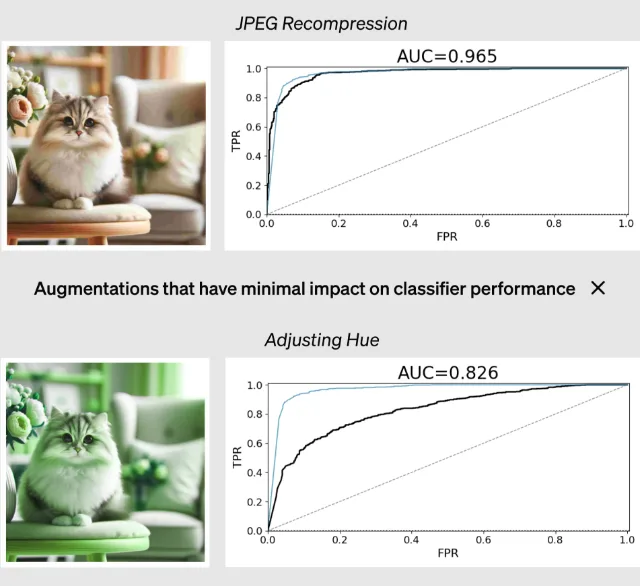

The tool also runs into trouble if the image has been modified in any way. Relatively minor modifications, such as cropping, compression, and changes in saturation, did not appear to be a big problem. The success rate was lower in these cases but still within an acceptable range, at around 95 to 97 percent. Changing the color, on the other hand, dropped the success rate to 82 percent.

This is where things get really sticky. The toolkit struggled to classify images that had undergone more substantial changes. OpenAI did not even publish the success rate in these cases, stating only that “other modifications, however, can reduce performance.”

This is unfortunate because it's an election year, and the vast majority of AI-generated images are going to be modified after the fact to better enrage people. In other words, the tool will likely recognize an image of Joe Biden asleep in the Oval Office surrounded by baggies of white powder, but not after the creator has slapped on a bunch of angry text and Photoshopped in a crying bald eagle or whatever.

At the very least, OpenAI is being transparent about the limitations of its detection technology. According to The Wall Street Journal, it is also giving external testers access to the tools described above to help fix these issues. The company, along with its close friend Microsoft, has also contributed $2 million to the Societal Resilience Fund, which aims to expand AI education and literacy.

Unfortunately, the idea of AI interfering with an election is not far-fetched. It's happening right now. AI-generated election ads and images have already been used this cycle, and there are likely many more to come as we slowly, slowly, slowly (slowly) crawl toward November.