It can even remember objects that are out of frame.

At its 2018 developer conference, Google first showed off its Duplex voice assistant technology in a demo that was both impressive and unsettling. Today, at I/O 2024, the company may provoke those same reactions again, this time with another application of its AI capabilities, called Project Astra.

Google couldn't even wait until today's keynote to tease Project Astra: it posted a video of a camera-based AI app to its social media accounts yesterday. During the keynote today, Google DeepMind CEO Demis Hassabis said his team has "always wanted to develop universal AI agents that can be helpful in everyday life." Project Astra is the result of progress on that front.

What is Project Astra?

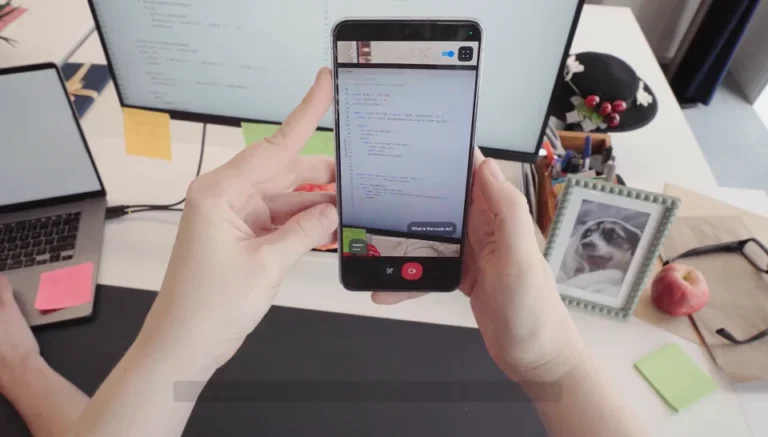

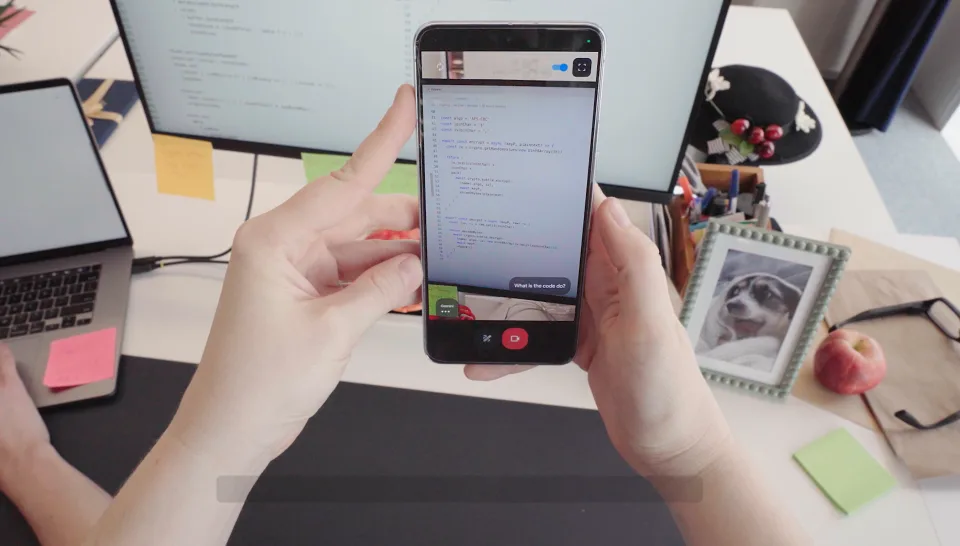

In a video that Google showed to the media during a briefing yesterday, Project Astra appeared to be an app with a viewfinder as its main interface. A person held up a phone, pointed its camera at different parts of an office and said, "Tell me when you see something that makes sound." When a speaker next to a monitor came into view, Gemini responded, "I see a speaker, which makes sound."

The person behind the phone then paused, drew an onscreen arrow pointing to the top circle on the speaker and asked, "What is that part of the speaker called?" Gemini answered instantly: "That is the tweeter. It produces high-frequency sounds."

Next, in the video, which Google said was captured in a single take, the tester walked over to a cup of crayons farther down the table and said, "Give me a creative alliteration about these." Gemini replied, "Creative crayons color cheerfully. They certainly craft colorful creations."

Wait, were those Project Astra glasses? Is Google Glass back?

The rest of the video shows Gemini in Project Astra identifying and explaining parts of the code on a monitor and telling the user which area they were in based on the view out the window. Most impressively, Astra answered the question "Do you remember where you saw my glasses?" even though the glasses were out of frame by then and had never been pointed out. "Yes, I do," Gemini said. "Your glasses were on a desk near a red apple."

After Astra located the glasses, the tester put them on, and the video switched to the wearer's point of view. The glasses had a camera that scanned the wearer's surroundings, which let them see things like a diagram on a whiteboard. The person in the video then asked, "What can I add here to make this system faster?" As they spoke, an onscreen waveform showed that the assistant was listening. When it responded, text captions appeared in sync with its speech. Astra suggested, "Adding a cache between the server and database could improve speed."

The tester then looked over at a pair of cats doodled on the board and asked, "What does this remind you of?" Astra answered, "Schrödinger's cat." Finally, they picked up a plush tiger toy, placed it next to a gorgeous golden retriever and asked for "a band name for this duo." Astra dutifully replied, "Golden stripes."

How does Project Astra work?

All of this means Astra was doing more than processing visual data in real time. It was also remembering what it had seen and drawing on a sizable backlog of stored information. According to Hassabis, this is possible because these "agents" were "designed to process information faster by continuously encoding video frames, combining the video and speech input into a timeline of events, and caching this information for efficient recall."
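Hassabis's description points to a simple mental model. Observations are appended to a chronological timeline, and an index over that timeline lets the agent answer a question like "where did you last see my glasses?" without replaying everything it has seen. The Python sketch that follows is a toy illustration of that idea under stated assumptions, not Google's implementation. The `Event` and `Timeline` classes and the `recall` method are hypothetical names, and plain object labels stand in for the learned video and speech encodings a real system would use.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Event:
    """One entry on the timeline: a summarized video frame or a speech segment."""
    timestamp: float              # seconds since the session started
    modality: str                 # "video" or "speech"
    content: str                  # frame caption or speech transcript
    labels: tuple[str, ...] = ()  # detected objects (stand-in for a real encoding)


@dataclass
class Timeline:
    """Hypothetical event timeline with a cache for fast recall."""
    events: list[Event] = field(default_factory=list)
    # Cache: label -> index of the most recent event that contained it.
    _last_seen: dict[str, int] = field(default_factory=dict, init=False)

    def add(self, event: Event) -> None:
        # Events are assumed to arrive in timestamp order, so appending
        # keeps video and speech interleaved chronologically.
        self.events.append(event)
        index = len(self.events) - 1
        for label in event.labels:
            self._last_seen[label] = index

    def recall(self, label: str) -> Optional[Event]:
        """Return the latest event where `label` was seen, without rescanning."""
        index = self._last_seen.get(label)
        return None if index is None else self.events[index]


if __name__ == "__main__":
    timeline = Timeline()
    timeline.add(Event(1.0, "video", "desk with glasses near a red apple",
                       ("glasses", "apple", "desk")))
    timeline.add(Event(4.5, "video", "speaker beside a monitor",
                       ("speaker", "monitor")))
    timeline.add(Event(9.0, "speech", "Do you remember where you saw my glasses?"))

    hit = timeline.recall("glasses")
    print(hit.content if hit else "not seen")  # prints: desk with glasses near a red apple
```

Keeping a label-to-index map alongside the event list trades a little memory for constant-time lookups. That is one plausible reading of "caching this information for efficient recall." A production system would more likely search over embeddings than match exact labels.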

It was also notable that, at least in the video, Astra responded quickly. In a blog post, Hassabis noted that "While we've made incredible progress developing AI systems that can understand multimodal information, getting response time down to something conversational is a difficult engineering challenge."

Google has also been working to give its AI a wider range of vocal expression. Using its speech models, the company says it has "enhanced how they sound, giving the agents a wider range of intonations." This kind of mimicry of human expressiveness is reminiscent of Duplex's pauses and utterances, which led some people to think Google's AI might be a contender to pass the Turing test.

When will Project Astra be available?

Astra is still an early feature, and there are no announced plans for a launch. However, Hassabis wrote that these assistants could someday be available "through your phone or glasses." He also wrote that "some of these capabilities are coming to Google products, like the Gemini app, later this year." There is no word yet on whether those glasses are an actual product or the successor to Google Glass.