MGIE interprets your instructions using multimodal large language models (MLLMs).

Apple is not currently among the most prominent players in artificial intelligence (AI), but the company has shown what it can contribute to the field by releasing a new open source AI model for image editing. The approach, known as MLLM-Guided Image Editing (MGIE), uses multimodal large language models (MLLMs) to interpret text-based commands for image manipulation. In other words, the tool edits photos according to the text the user enters. It is not the first tool that can do this, but the project’s paper (PDF) states that “human instructions are sometimes too brief for current methods to capture and follow.”

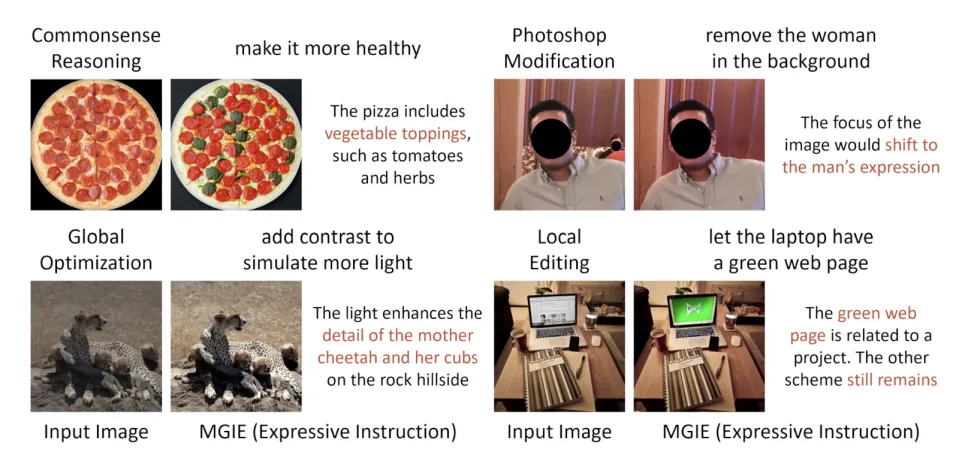

The company developed MGIE in collaboration with researchers from the University of California, Santa Barbara. MLLMs can translate simple or ambiguous text prompts into more detailed, explicit instructions that the image editor can follow. For example, if a user wants to edit a photo of a pepperoni pizza to “make it more healthy,” the MLLM can interpret the request as “add vegetable toppings” and edit the photo accordingly.
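The two-step flow described above can be pictured with a toy sketch. This is not Apple's code or the MGIE API: the lookup table, the `EditRequest` type, and the function names are all hypothetical stand-ins, and the "image" is just a text description. It only illustrates the idea of expanding a brief prompt before handing it to an editor.

```python
from dataclasses import dataclass

# Hypothetical lookup table standing in for the MLLM. The real model
# generates the expanded instruction instead of looking it up.
EXPANSIONS = {
    "make it more healthy": "add vegetable toppings",
}


@dataclass
class EditRequest:
    image_description: str  # placeholder for real pixel data
    instruction: str        # the user's brief prompt


def expand_instruction(instruction: str) -> str:
    """Stage 1 (stand-in for the MLLM): make a terse prompt explicit."""
    key = instruction.strip().lower()
    # Fall back to the original text when no expansion is known.
    return EXPANSIONS.get(key, instruction)


def apply_edit(image_description: str, explicit_instruction: str) -> str:
    """Stage 2 (stand-in for the image editor): note the edit on the description."""
    return f"{image_description} [edited: {explicit_instruction}]"


def edit(request: EditRequest) -> str:
    """Run both stages: expand the prompt, then apply the explicit edit."""
    explicit = expand_instruction(request.instruction)
    return apply_edit(request.image_description, explicit)


if __name__ == "__main__":
    print(edit(EditRequest("pepperoni pizza", "Make it more healthy")))
```

Run as a script, this prints `pepperoni pizza [edited: add vegetable toppings]`. A prompt with no known expansion passes through unchanged.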

Beyond making substantial changes to images, MGIE can crop, resize, and rotate photos and adjust their brightness, contrast, and color balance, all through text prompts. It can also edit specific regions of a photo, for example changing a person’s hair, eyes, or clothing, or removing objects from the background.

According to VentureBeat, Apple released the model for download on GitHub, and anyone interested can also try a demo hosted on Hugging Face Spaces. Apple has not said whether it plans to turn what it learned from this project into a tool or feature for any of its products.